Measuring Quality of Grammars for Procedural Level Generation

Updated Wednesday February 7th 2019

Improving Debuggers for Procedural Game Levels

Quinten Heijn and Riemer van Rozen participated in the International Conference on the Foundations of Digital Games (FDG), in Malmö, Sweden in August 2018.

Their talk at the Procedural Content Generation in Games (PCG) workshop, a part of FDG, presented the paper Measuring Quality of Grammars for Procedural Level Generation [1]. The paper was written in the context of Quinten’s MSc project, a collaboration with Joris Dormans of Ludomotion. This work addresses the challenge of improving the quality of procedural level generation. Unlike other works that focus on the output of content generators, this work analyzes root causes of quality issues in the source code (grammar rules) of the generators.

Following a very interesting related talk by Thomas Smith on generating graphs for Zelda-style levels via Answer-Set Programming, Riemer gave an introduction to Software Evolution that framed the talk. According to Antonios Liapis, Joris was virtually present too.

Feels like Joris & @PlayUnexplored have a special virtual seat in this session. 2nd talk is on judging code quality of grammar-based dungeon generation. It's a Software Evolution approach for root-cause analysis in PCG, cool for explainable AI for white-box PCG? Shoutout @jichenz pic.twitter.com/udCCbDA9aL

— Antonios Liapis (@SentientDesigns) August 7, 2018

Quinten presented the main contributions of the paper, showing movies of LudoScope Lite (LL). LL is a prototype level generator and analyzer (currently limited to tile maps) built by Quinten using Rascal, a meta-programming language and language workbench. The prototype is available on GitHub.

In good company, we see Rafaël Bidarra on the left and Julian Togelius on the right.

.@VisKnut explaining declarative game level properties for measuring the quality of grammars for procedural level generation @PcgWorkshop #FDG18 pic.twitter.com/JVxAVyMSRO

— Riemer van Rozen (@rvrozen) August 7, 2018

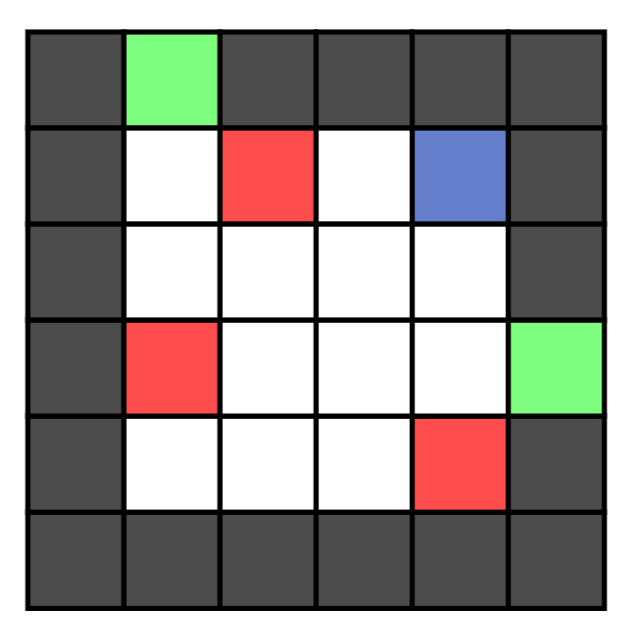

We explain LL using a simple example: a game level of a dungeon room. Below, we see a tile map on the left and its running game instance on the right. Players have to traverse the room without being burnt by fire pillars. They can use water from a pond to extinguish the flames.

[Figure: tile map (left) and running game instance (right)]

LL demonstrates two techniques that developers can use to analyze the root causes of quality issues in generated levels. First, the Metric of Added Detail (MAD) raises flags for grammar rules that remove detail with respect to their position in the transformation pipeline. Second, Specification Analysis Reporting (SAnR) analyzes level generation histories against level properties and reports problematic grammar rules.
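To make the idea behind MAD concrete, here is a minimal Python sketch. It is not the definition from the paper or the Rascal code of LL. It assumes a hypothetical `DETAIL` ranking in which tile types introduced later in the pipeline count as more detailed, and it flags any rule whose right-hand side carries less detail than its left-hand side. The tile names, the ranks, and the `Rule` class are all invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical detail ranks (an assumption, not taken from the paper):
# tile types introduced later in the pipeline count as more detailed.
DETAIL = {"undefined": 0, "floor": 1, "water": 2, "pillar": 2, "fire": 3}


@dataclass(frozen=True)
class Rule:
    name: str
    lhs: tuple  # tile types matched by the rule
    rhs: tuple  # tile types written by the rule (same length as lhs)


def added_detail(rule: Rule) -> int:
    """Sum of per-tile detail changes when lhs is rewritten to rhs."""
    return sum(DETAIL[new] - DETAIL[old] for old, new in zip(rule.lhs, rule.rhs))


def flag_rules(rules):
    """Return the names of rules whose net added detail is negative."""
    return [rule.name for rule in rules if added_detail(rule) < 0]


rules = [
    Rule("place_floor", ("undefined", "undefined"), ("floor", "floor")),
    Rule("add_pond", ("floor",), ("water",)),
    Rule("erase_fire", ("fire",), ("floor",)),
]
print(flag_rules(rules))  # prints ['erase_fire']
```

A flag is a hint rather than a verdict: a rule that removes detail may be intentional, so the sketch only points a developer at rules worth inspecting.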

The movie below demonstrates how a pipeline of three stages (consisting of modules m1..m3 that each contain grammar rules) generates a level conforming to each of its level properties.
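Independently of the movie, the shape of such a pipeline can be sketched in a few lines of Python. This is an illustrative simplification, not LL's implementation: the rules here replace single tiles deterministically, whereas real grammar rules match two-dimensional patterns. The helper names (`replace_all`, `replace_first`, `run_pipeline`) and the tile characters are assumptions. The sketch records a generation history, one snapshot per applied rule.

```python
from typing import Callable, List, Tuple

TileMap = List[List[str]]
# A rule is a name plus a function that rewrites the map in place and
# returns True if it matched. This is a simplification for illustration.
Rule = Tuple[str, Callable[[TileMap], bool]]
Module = Tuple[str, List[Rule]]


def replace_all(old: str, new: str) -> Callable[[TileMap], bool]:
    """Build a rule that rewrites every `old` tile to `new`."""
    def apply(level: TileMap) -> bool:
        changed = False
        for row in level:
            for x, tile in enumerate(row):
                if tile == old:
                    row[x] = new
                    changed = True
        return changed
    return apply


def replace_first(old: str, new: str) -> Callable[[TileMap], bool]:
    """Build a rule that rewrites the first `old` tile (row-major) to `new`."""
    def apply(level: TileMap) -> bool:
        for row in level:
            for x, tile in enumerate(row):
                if tile == old:
                    row[x] = new
                    return True
        return False
    return apply


def run_pipeline(level: TileMap, modules: List[Module]):
    """Apply each module's rules in order; return (module, rule, snapshot) steps."""
    history = []
    for module_name, rules in modules:
        for rule_name, apply in rules:
            if apply(level):
                history.append((module_name, rule_name, [row[:] for row in level]))
    return history


# '?' undefined, '.' floor, 'P' pillar, 'W' water, 'F' fire pillar
level = [["?"] * 3 for _ in range(2)]
modules = [
    ("m1", [("fill_floor", replace_all("?", "."))]),
    ("m2", [("place_pillar", replace_first(".", "P")),
            ("place_pond", replace_first(".", "W"))]),
    ("m3", [("ignite_pillars", replace_all("P", "F"))]),
]
history = run_pipeline(level, modules)
for module_name, rule_name, snapshot in history:
    print(module_name, rule_name, ["".join(row) for row in snapshot])
```

Keeping a snapshot per applied rule is what makes later analysis possible: each change in the level can be traced back to the module and rule that caused it.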

The movie below demonstrates how SAnR helps find broken levels, which facilitates debugging and can be used to prevent bad levels from being generated.
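As a rough illustration of this kind of analysis, the Python sketch below checks a generation history against declarative level properties. It reports the rules after which a property went from holding to violated, and lists the properties a finished level still violates. It is a sketch under stated assumptions, not SAnR itself: the property names, the tile characters, and the example history are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Level = Tuple[str, ...]            # one string per row, one character per tile
History = List[Tuple[str, Level]]  # (rule name, level after that rule)
Property = Callable[[Level], bool]


def count(level: Level, tile: str) -> int:
    """Number of tiles of the given type in the level."""
    return sum(row.count(tile) for row in level)


# Hypothetical level properties: 'W' is water, 'F' is a fire pillar.
PROPERTIES: Dict[str, Property] = {
    "has_water": lambda level: count(level, "W") >= 1,
    "at_most_two_fires": lambda level: count(level, "F") <= 2,
}


def breaking_rules(history: History, properties: Dict[str, Property]):
    """Map each property to the rules after which it stopped holding."""
    findings = {}
    for name, holds in properties.items():
        rules = [rule for (_, before), (rule, after) in zip(history, history[1:])
                 if holds(before) and not holds(after)]
        if rules:
            findings[name] = rules
    return findings


def violated(level: Level, properties: Dict[str, Property]) -> List[str]:
    """Names of the properties that the given level does not satisfy."""
    return [name for name, holds in properties.items() if not holds(level)]


history: History = [
    ("start", ("...", "...")),
    ("add_pond", ("W..", "...")),
    ("add_fire", ("W.F", "...")),
    ("widen_path", ("..F", "...")),    # overwrites the pond
    ("scatter_fire", ("..F", "F.F")),  # adds a third fire pillar
]
print(breaking_rules(history, PROPERTIES))
# prints {'has_water': ['widen_path'], 'at_most_two_fires': ['scatter_fire']}
print(violated(history[-1][1], PROPERTIES))
# prints ['has_water', 'at_most_two_fires']
```

A generator could call a check like `violated` before emitting a level and discard or regenerate levels that fail, while the per-rule report tells the developer where in the grammar to start debugging.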

Conclusions

Specification Analysis Reporting (SAnR) in particular may prove very useful, since debugging grammar rules in level generation histories is an important requirement for improving the quality of grammar-based content generators.

Are MAD and SAnR odd names? Well, yes, but simultaneously debugging grammar rules and potentially countless levels feels mad, and therefore becoming saner must be urgent. In that light, the results game developers achieve using generative grammars are not just good, but astonishing. This cutting-edge technology explores the limits of procedural content generation, and it deserves the attention of researchers who can help developers push those limits forward.

- Symposium. In case you wish to learn more, Quinten will give a talk at the Symposium on Live Game Design in Amsterdam on February 27th.

Bibliography

- R. van Rozen and Q. Heijn. Measuring Quality of Grammars for Procedural Level Generation. Chapter revision. In Proceedings of the 13th International Conference on Foundations of Digital Games, FDG, as part of the 9th Workshop on Procedural Content Generation, PCG, Malmö, Sweden, August 7–10, 2018. [pdf]

- Q. Heijn. Improving the Quality of Grammars for Procedural Level Generation: A Software Evolution Perspective. MSc Thesis. University of Amsterdam, Master of Software Engineering, August 30, 2018. [pdf]

- R. van Rozen and Q. Heijn. Measuring Quality of Grammars for Procedural Level Generation. Presentation slide deck. In Proceedings of the 13th International Conference on Foundations of Digital Games, FDG, as part of the 9th Workshop on Procedural Content Generation, PCG, Malmö, Sweden, August 7–10, 2018. [pdf]

Proceedings. The full proceedings (the collection of papers) of FDG 2018 can be found here at dblp [url]. The papers are available on the ACM digital library [url]. In case you don’t have access, most authors share a pre-print you might find via Google Scholar.

Thanks. The city council of Malmö warmly welcomed the conference participants to a reception and a dinner at the historical city hall, as recorded by the conference chair Jose Font. We thank the organizers for a great conference!

#fdg18 gala dinner at the Town Hall thanks to @malmostad 's support. pic.twitter.com/hSTAD7IKZx

— Jose Maria Font (@erfont) August 9, 2018

Finally, we thank Joris Dormans for his continued collaboration with us in applied research on PCG and Automated Game Design.